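PV Reclaim Policy

When users no longer need a volume, they can delete the PVC object through the API, which allows the resource to be reclaimed. The reclaim policy of a PersistentVolume tells the cluster what to do with the volume once it has been released from its claim. Currently, a volume can be Retained, Recycled (the Recycle policy is deprecated), or Deleted.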
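To see which policy each volume currently carries, the policy and phase can be listed side by side. The following is a minimal sketch; the function name list_pv_policies is a hypothetical helper, not part of kubectl, and it assumes kubectl is already configured for the cluster.

```shell
# Hypothetical helper: list every PV with its reclaim policy, phase and bound claim.
# PVs are cluster-scoped, so no namespace flag is needed.
list_pv_policies() {
  kubectl get pv \
    -o custom-columns='NAME:.metadata.name,POLICY:.spec.persistentVolumeReclaimPolicy,PHASE:.status.phase,CLAIM:.spec.claimRef.name'
}
```

Calling list_pv_policies prints one row per PersistentVolume.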

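Retain

The Retain reclaim policy lets users reclaim the resource manually. When the PersistentVolumeClaim is deleted, the PersistentVolume still exists and the volume is considered "released". Because the previous claimant's data is still on the volume, it cannot yet be used by another claim. An administrator can reclaim the volume manually with the following steps: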

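- Delete the PersistentVolume object. The associated storage asset in the external infrastructure (for example an AWS EBS, GCE PD, Azure Disk, or Cinder volume) still exists after the PV is deleted.

- Manually clean up the data on the associated storage asset as appropriate.

- Manually delete the associated storage asset. If you want to reuse the storage asset instead, create a new PersistentVolume object based on the storage asset's definition.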

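Delete

For volume plugins that support the Delete reclaim policy, deletion removes the PersistentVolume object from Kubernetes and also removes the associated storage asset from the external infrastructure (such as an AWS EBS, GCE PD, Azure Disk, or Cinder volume). Dynamically provisioned volumes inherit the reclaim policy set in their StorageClass, which defaults to Delete. Administrators should configure the StorageClass according to users' expectations; otherwise the PV has to be edited or patched after it is created. See Change the Reclaim Policy of a PersistentVolume.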
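Patching an existing PV, as mentioned above, can be sketched as follows. The function name set_reclaim_policy and the PV name used in the usage note are hypothetical; the kubectl patch call follows the form shown in the Kubernetes task page on changing a PV's reclaim policy.

```shell
# Hypothetical helper: set the reclaim policy of an existing PV.
# Usage: set_reclaim_policy <pv-name> <Retain|Delete>
set_reclaim_policy() {
  pv_name="$1"
  policy="$2"
  # Only accept the two non-deprecated policies.
  case "$policy" in
    Retain|Delete) ;;
    *) echo "unsupported reclaim policy: $policy" >&2; return 1 ;;
  esac
  kubectl patch pv "$pv_name" \
    -p "{\"spec\":{\"persistentVolumeReclaimPolicy\":\"$policy\"}}"
}
```

For example, set_reclaim_policy my-pv Retain would switch a dynamically provisioned volume named my-pv (an assumed name) from Delete to Retain.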

Reference: https://kubernetes.io/zh/docs/concepts/storage/persistent-volumes/#reclaiming

[root@k8s-offline-master01 yaml]# kubectl get pvc -n zcbus01
NAME           STATUS   VOLUME                           CAPACITY   ACCESS MODES   STORAGECLASS   AGE
datadir-zk-0   Bound    data-kafka-zookeeper-zcbus01-1   5Gi        RWO            zk-storage     65s
datadir-zk-1   Bound    data-kafka-zookeeper-zcbus01-0   5Gi        RWO            zk-storage     49s
datadir-zk-2   Bound    data-kafka-zookeeper-zcbus01-2   5Gi        RWO            zk-storage     30s
[root@k8s-offline-master01 yaml]#
[root@k8s-offline-master01 yaml]# kubectl get pv -n zcbus01
NAME                             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                  STORAGECLASS   REASON   AGE
data-kafka-zookeeper-zcbus01-0   5Gi        RWO            Retain           Bound    zcbus01/datadir-zk-1   zk-storage              68s
data-kafka-zookeeper-zcbus01-1   5Gi        RWO            Retain           Bound    zcbus01/datadir-zk-0   zk-storage              68s
data-kafka-zookeeper-zcbus01-2   5Gi        RWO            Retain           Bound    zcbus01/datadir-zk-2   zk-storage              68s

PVs are cluster-scoped, so the -n zcbus01 flag has no effect on kubectl get pv.

Use kubectl to view the YAML configuration of the PV and PVC.

pv

[root@k8s-offline-master01 yaml]# kubectl get pv data-kafka-zookeeper-zcbus01-0 -oyaml

apiVersion: v1

kind: PersistentVolume

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","kind":"PersistentVolume","metadata":{"annotations":{},"name":"data-kafka-zookeeper-zcbus01-0"},"spec":{"accessModes":["ReadWriteOnce"],"capacity":{"storage":"5Gi"},"local":{"path":"/data/zcbus01/zk/zkdata-01"},"nodeAffinity":{"required":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"kubernetes.io/hostname","operator":"In","values":["192.168.0.105"]}]}]}},"persistentVolumeReclaimPolicy":"Retain","storageClassName":"zk-storage","volumeMode":"Filesystem"}}

pv.kubernetes.io/bound-by-controller: "yes"

creationTimestamp: "2021-10-31T23:10:22Z"

finalizers:

- kubernetes.io/pv-protection

managedFields:

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:status:

f:phase: {}

manager: kube-controller-manager

operation: Update

time: "2021-10-31T23:10:22Z"

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.: {}

f:kubectl.kubernetes.io/last-applied-configuration: {}

f:spec:

f:accessModes: {}

f:capacity:

.: {}

f:storage: {}

f:local:

.: {}

f:path: {}

f:nodeAffinity:

.: {}

f:required:

.: {}

f:nodeSelectorTerms: {}

f:persistentVolumeReclaimPolicy: {}

f:storageClassName: {}

f:volumeMode: {}

manager: kubectl

operation: Update

time: "2021-10-31T23:10:22Z"

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

f:pv.kubernetes.io/bound-by-controller: {}

f:spec:

f:claimRef:

.: {}

f:apiVersion: {}

f:kind: {}

f:name: {}

f:namespace: {}

f:resourceVersion: {}

f:uid: {}

manager: kube-scheduler

operation: Update

time: "2021-10-31T23:10:38Z"

name: data-kafka-zookeeper-zcbus01-0

resourceVersion: "2391597"

selfLink: /api/v1/persistentvolumes/data-kafka-zookeeper-zcbus01-0

uid: ae6d652c-5315-4ff4-8d5b-fd3d69824319

spec:

accessModes:

- ReadWriteOnce

capacity:

storage: 5Gi

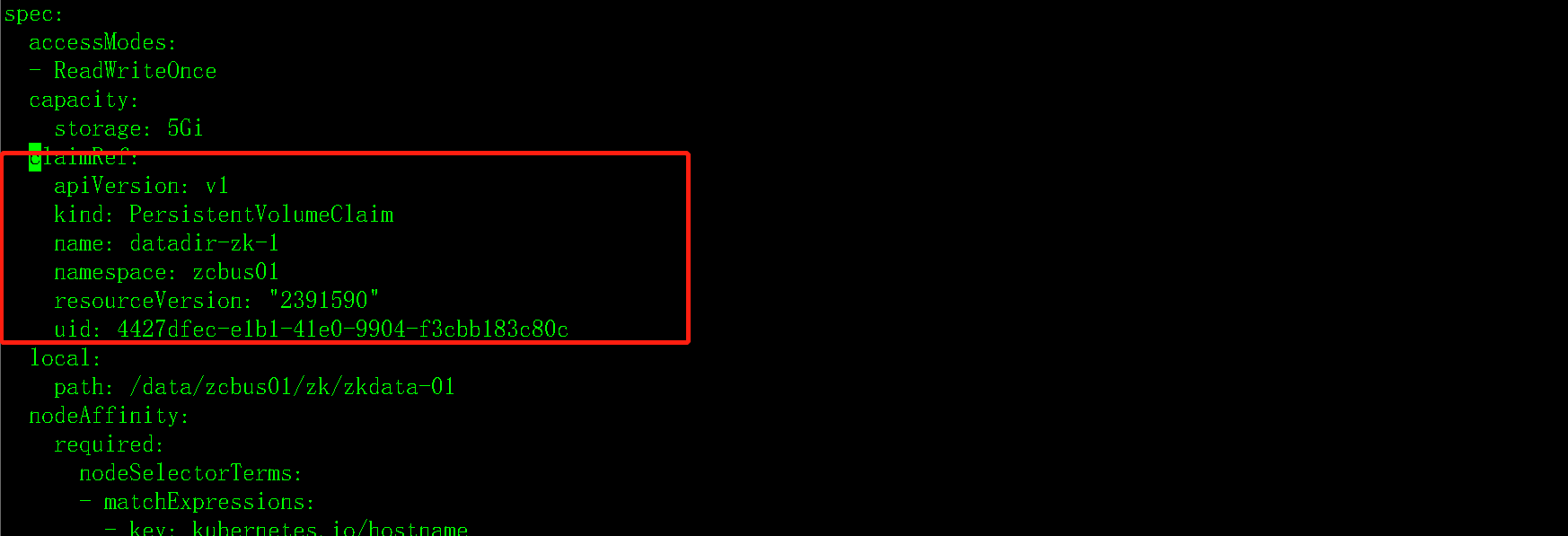

claimRef:

apiVersion: v1

kind: PersistentVolumeClaim

name: datadir-zk-1

namespace: zcbus01

resourceVersion: "2391590"

uid: 4427dfec-e1b1-41e0-9904-f3cbb183c80c

local:

path: /data/zcbus01/zk/zkdata-01

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- 192.168.0.105

persistentVolumeReclaimPolicy: Retain

storageClassName: zk-storage

volumeMode: Filesystem

status:

phase: Bound测试删除 pvc

[root@k8s-offline-master01 yaml]# kubectl get pvc -n zcbus01
NAME           STATUS   VOLUME                           CAPACITY   ACCESS MODES   STORAGECLASS   AGE
datadir-zk-0   Bound    data-kafka-zookeeper-zcbus01-1   5Gi        RWO            zk-storage     3m32s
datadir-zk-1   Bound    data-kafka-zookeeper-zcbus01-0   5Gi        RWO            zk-storage     3m16s
datadir-zk-2   Bound    data-kafka-zookeeper-zcbus01-2   5Gi        RWO            zk-storage     2m57s
[root@k8s-offline-master01 yaml]# kubectl delete pvc datadir-zk-2 -n zcbus01
persistentvolumeclaim "datadir-zk-2" deleted
[root@k8s-offline-master01 yaml]# kubectl delete pvc datadir-zk-0 -n zcbus01
persistentvolumeclaim "datadir-zk-0" deleted
[root@k8s-offline-master01 yaml]# kubectl delete pvc datadir-zk-1 -n zcbus01
persistentvolumeclaim "datadir-zk-1" deleted
[root@k8s-offline-master01 yaml]# kubectl get pvc -n zcbus01
No resources found in zcbus01 namespace.
[root@k8s-offline-master01 yaml]# kubectl get pv -n zcbus01
NAME                             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS     CLAIM                  STORAGECLASS   REASON   AGE
data-kafka-zookeeper-zcbus01-0   5Gi        RWO            Retain           Released   zcbus01/datadir-zk-1   zk-storage              4m32s
data-kafka-zookeeper-zcbus01-1   5Gi        RWO            Retain           Released   zcbus01/datadir-zk-0   zk-storage              4m32s
data-kafka-zookeeper-zcbus01-2   5Gi        RWO            Retain           Released   zcbus01/datadir-zk-2   zk-storage              4m32s

Because each PV's persistentVolumeReclaimPolicy is Retain, the PV is kept after its PVC is deleted, and its status changes to Released.

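To make a Released PV bindable again, run kubectl edit pv on it (for example kubectl edit pv data-kafka-zookeeper-zcbus01-1) and remove the contents of spec.claimRef, which returns the PV to the Available state. The previous claimant's data is still on the volume unless it was cleaned up manually.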
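The same cleanup can be done without an interactive editor. The sketch below is one possible approach, not the method used in the transcript: it assumes a merge patch that sets spec.claimRef to null (which removes the field), and the function name make_pv_available is hypothetical.

```shell
# Hypothetical helper: clear spec.claimRef on a PV, but only if it is Released.
# Usage: make_pv_available <pv-name>
make_pv_available() {
  pv_name="$1"
  # Read the current phase so a Bound PV is never detached by accident.
  phase=$(kubectl get pv "$pv_name" -o jsonpath='{.status.phase}') || return 1
  if [ "$phase" != "Released" ]; then
    echo "skip $pv_name: phase is '$phase', expected Released" >&2
    return 1
  fi
  # In a JSON merge patch, a null value deletes the field.
  kubectl patch pv "$pv_name" --type merge -p '{"spec":{"claimRef":null}}'
}
```

For the volumes in this walkthrough, that would be make_pv_available data-kafka-zookeeper-zcbus01-1, repeated for each Released PV.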

After clearing claimRef on the first PV, the PV status looks like this:

[root@k8s-offline-master01 yaml]# kubectl get pv -n zcbus01
NAME                             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                  STORAGECLASS   REASON   AGE
data-kafka-zookeeper-zcbus01-0   5Gi        RWO            Retain           Available                          zk-storage              6m32s
data-kafka-zookeeper-zcbus01-1   5Gi        RWO            Retain           Released    zcbus01/datadir-zk-0   zk-storage              6m32s
data-kafka-zookeeper-zcbus01-2   5Gi        RWO            Retain           Released    zcbus01/datadir-zk-2   zk-storage              6m32s
[root@k8s-offline-master01 yaml]# kubectl edit pv data-kafka-zookeeper-zcbus01-1
[root@k8s-offline-master01 yaml]# kubectl edit pv data-kafka-zookeeper-zcbus01-2
[root@k8s-offline-master01 yaml]# kubectl get pv -n zcbus01
NAME                             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
data-kafka-zookeeper-zcbus01-0   5Gi        RWO            Retain           Available           zk-storage              7m34s
data-kafka-zookeeper-zcbus01-1   5Gi        RWO            Retain           Available           zk-storage              7m34s
data-kafka-zookeeper-zcbus01-2   5Gi        RWO            Retain           Available           zk-storage              7m34s

At this point the PVs can be bound and mounted again.